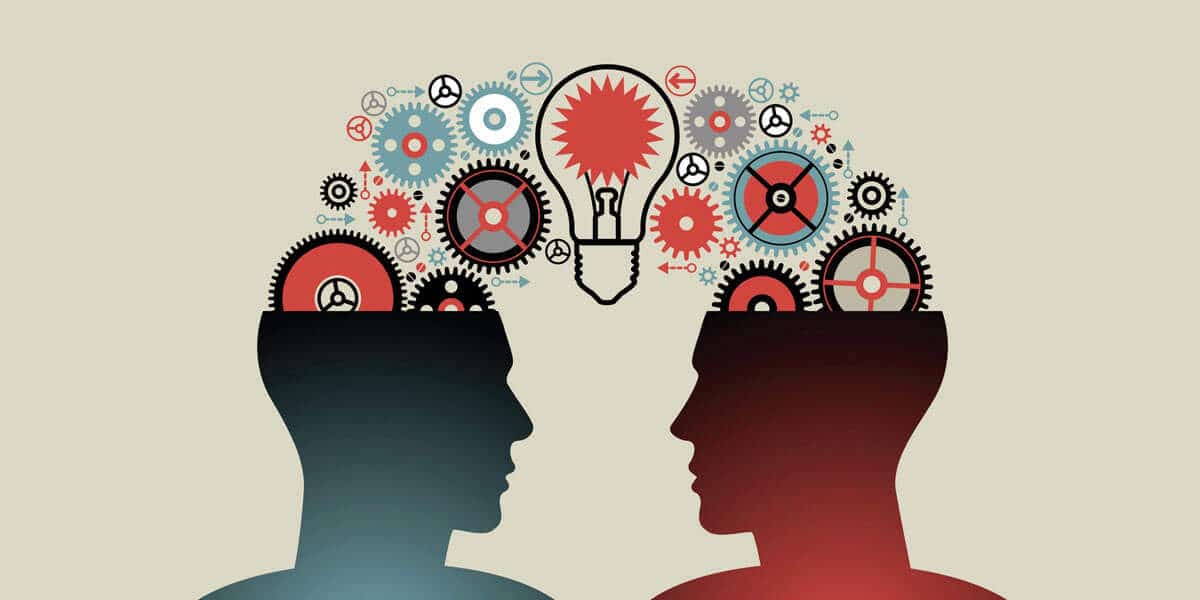

Transfer learning is the practice of reusing a previously trained model for a new problem. It is extremely popular in deep learning at the moment because it makes it possible to train neural networks on relatively small amounts of data. This is very useful, since most real-world problems do not come with millions of labelled data points to train such complex models.

Transfer learning – what is it?

In transfer learning, the knowledge of an already trained machine learning model is applied to a different but related problem. For instance, if you have trained a simple classifier to predict whether a picture contains a backpack, you can use the knowledge the model gained during training to identify other objects, such as sunglasses. In other words, we use what was learned in one task to improve generalization in another: we transfer what the network has learned about task A to a new task B.

The idea is to take knowledge the model has learned from a task for which a lot of labelled training data is available and transfer it to a new task for which we have little data. Learning does not start from scratch but from the patterns learned on the related task.

Transfer learning is not really a machine learning technique in its own right. It is better seen as a “design methodology” within machine learning, much like active learning. Nor is it an exclusive part or subfield of machine learning. Nevertheless, it became popular together with neural networks, because they need huge amounts of data and computing power.

Transfer learning – design methodology

In image recognition, neural networks usually learn to detect edges in the early layers, shapes in the middle layers, and task-specific features in the last layers. With transfer learning, you keep the early and middle layers and retrain only the last layers. This lets the new model benefit from the labelled data of the task it was originally trained on.
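The idea of keeping the earlier layers fixed and retraining only the last one can be sketched in a few lines of plain Python. This is a toy illustration, not a real image model: `FROZEN_WEIGHTS` is a hand-picked, hypothetical stand-in for layers that would normally come from a network trained on task A, and the data set and the helper names (`frozen_features`, `train_head`, `predict`) are assumptions made for this example.

```python
import math
import random

# Hypothetical stand-in for the early and middle layers of a network that was
# already trained on task A. The values are hand-picked for illustration only.
FROZEN_WEIGHTS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]


def frozen_features(x):
    """Run an input through the frozen layers (they are never updated below)."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]


def train_head(features, labels, epochs=200, lr=0.5):
    """Train only the new last layer (a logistic-regression head) for task B."""
    weights = [0.0] * len(features[0])
    bias = 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            z = sum(w * fi for w, fi in zip(weights, f)) + bias
            p = 1.0 / (1.0 + math.exp(-z))
            grad = p - y  # derivative of the log loss with respect to z
            weights = [w - lr * grad * fi for w, fi in zip(weights, f)]
            bias -= lr * grad
    return weights, bias


def predict(x, weights, bias):
    """Classify an input using the frozen layers plus the newly trained head."""
    z = sum(w * fi for w, fi in zip(weights, frozen_features(x))) + bias
    return 1 if z > 0 else 0


# Small labelled data set for the new task B: is x0 + x1 positive?
rng = random.Random(0)
points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(60)]
points = [p for p in points if abs(p[0] + p[1]) > 0.2]  # keep a clear margin
labels = [1 if p[0] + p[1] > 0 else 0 for p in points]

head_w, head_b = train_head([frozen_features(p) for p in points], labels)
accuracy = sum(predict(p, head_w, head_b) == y for p, y in zip(points, labels)) / len(points)
print(f"training accuracy on task B: {accuracy:.2f}")
```

Only `head_w` and `head_b` change during training. The frozen layer is used purely as a feature extractor, which is why a few dozen labelled points are enough here.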

In transfer learning, we try to transfer as much knowledge as possible from the task the model was originally trained on to the new task. This knowledge can take different forms depending on the problem and the data. For example, it might be knowledge of how models are composed, which makes it easier to identify new objects.

Pros and cons

Transfer learning has several advantages. The main ones are shorter training time, better neural network performance in most cases, and no need for a lot of data. Because the model is already pre-trained, you can build a good machine learning model with relatively little training data. This is particularly valuable in NLP, where expert knowledge is needed to create large labelled data sets.

It is hard to give rules that apply generally in machine learning. Typically, you use transfer learning when you do not have enough labelled training data to train your network from scratch, and/or when a network pre-trained on a similar task already exists, usually one trained on huge amounts of data. It is also applicable when tasks A and B have the same kind of input.

If the original model was trained with TensorFlow, you can simply restore it and retrain some of its layers for your task. Note that transfer learning works only if the features learned in the first task are general, i.e., useful in other related tasks as well.
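As a rough illustration of restoring a TensorFlow model and retraining only part of it, the sketch below uses the Keras API. It assumes an image classification task and picks MobileNetV2 from `tf.keras.applications` as the pre-trained network. That choice, the 160×160 input size, and the function name `build_transfer_model` are assumptions made for this example, not something prescribed above.

```python
import tensorflow as tf


def build_transfer_model(num_classes, weights="imagenet", input_shape=(160, 160, 3)):
    """Reuse a pre-trained MobileNetV2 and add a new, trainable classifier head."""
    # Restore the pre-trained network without its original classification layer.
    base = tf.keras.applications.MobileNetV2(
        input_shape=input_shape, include_top=False, weights=weights
    )
    base.trainable = False  # freeze all restored layers

    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)  # keep batch-norm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    # New last layer for the new task; only these weights will be trained.
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    return tf.keras.Model(inputs, outputs)
```

Calling `build_transfer_model(num_classes=2)` downloads the ImageNet weights on first use. The resulting model can then be compiled and fitted on the small data set for the new task as usual. Inputs should be scaled the way the base network expects, for example with `tf.keras.applications.mobilenet_v2.preprocess_input`.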