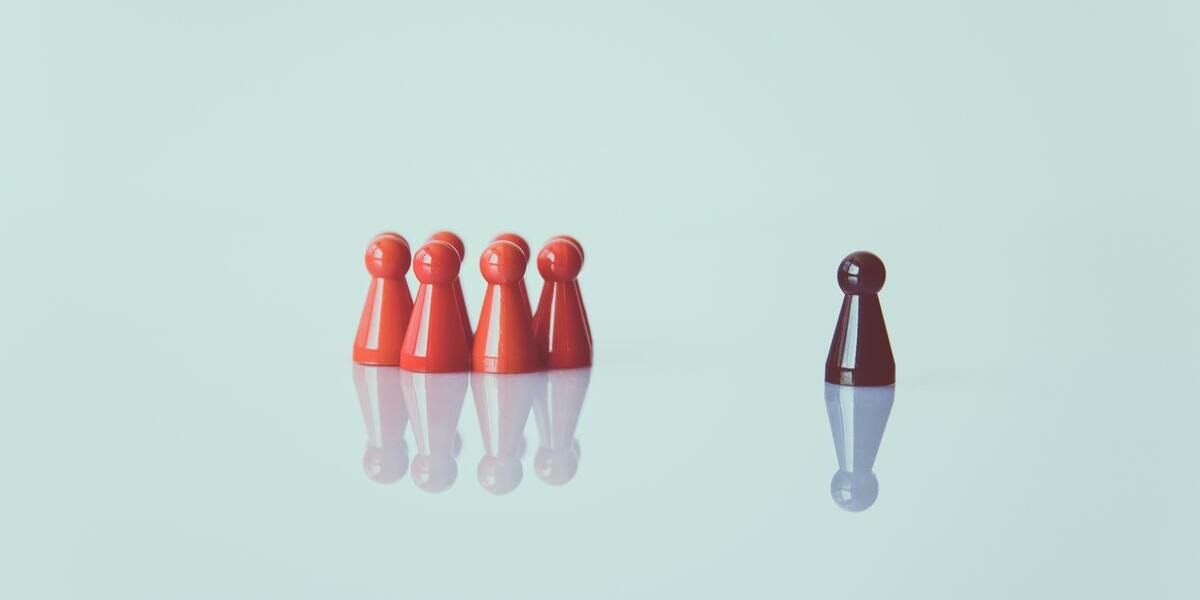

Ensemble methods are predictive models that combine predictions from two or more other models. Ensemble learning methods are popular and are the go-to technique when the best possible performance is the most important outcome of a predictive modeling project.

However, they are not always the most appropriate technique to use, and beginners in applied machine learning often expect that ensembles, or one specific ensemble method, are always the best choice. Ensembles offer two specific benefits on a predictive modeling project, and it is important to know what these benefits are and how to measure them to ensure that using an ensemble is the right decision for the project.

Ensemble Learning

Ensemble learning refers to a machine learning model that combines predictions from two or more models. The models in the ensemble, called ensemble members, can be of the same type or of different types, and may or may not be trained on the same training data. Predictions made by ensemble members can be combined using statistics such as the mode or the mean, or by more sophisticated methods that learn how much to trust each member and under what conditions.

Research into ensemble learning really gained momentum in the 1990s, when papers on the most popular and widely used methods were published. In the late 2000s, ensembles were adopted more widely, in part because of their enormous success in machine learning competitions such as the Netflix Prize and later competitions on Kaggle. Ensemble methods significantly increase computational cost and complexity. This increase comes from the expertise and time needed to train and maintain multiple models rather than a single model.
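The two simple combination rules mentioned above, the mode for class labels and the mean for numeric predictions, can be sketched in a few lines. This is a minimal illustration using only the Python standard library; the function names `combine_by_mode` and `combine_by_mean` and the example predictions are hypothetical and not taken from any particular library.

```python
from collections import Counter
from statistics import mean

def combine_by_mode(member_predictions):
    """Majority vote: pick the most common label for each example.

    member_predictions holds one list of labels per ensemble member.
    """
    return [Counter(votes).most_common(1)[0][0] for votes in zip(*member_predictions)]

def combine_by_mean(member_predictions):
    """Average the numeric predictions of all members for each example."""
    return [mean(values) for values in zip(*member_predictions)]

# Hypothetical predictions from three classifiers on three examples.
class_votes = [[0, 1, 1], [0, 0, 1], [1, 1, 1]]
voted = combine_by_mode(class_votes)

# Hypothetical predictions from two regressors on two examples.
reg_outputs = [[1.0, 2.0], [3.0, 4.0]]
averaged = combine_by_mean(reg_outputs)

print(voted, averaged)
```

Using an odd number of classifiers avoids tied votes in the binary case; how ties should be broken otherwise is a design choice this sketch does not address.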

Why should we consider using ensemble methods?

There are two main reasons to use an ensemble instead of a single model, and they are related.

- Performance: an ensemble can make better predictions and achieve better performance than any single contributing model.

- Robustness: an ensemble reduces the spread or dispersion of the predictions and of model performance.

Ensemble methods are used to achieve better predictive performance on a predictive modeling problem than a single predictive model can. The way this is achieved can be understood as the model reducing the variance component of the prediction error by adding bias. There is another important and less discussed benefit of ensembles, which is improved robustness or reliability of the model's average performance. Both are important concerns on a machine learning project, and sometimes you may prefer one or both of these properties in a model.

Improve robustness

On a predictive modeling project, we often evaluate many models or modeling pipelines and choose one that performs well, or best, as our final model. The algorithm or pipeline is then fit on all available data and used to make predictions on new data.

The average accuracy or error of a model is a summary of its expected performance, when in fact some models performed better and some performed worse on different subsets of the data. Looking at the minimum and maximum performance scores gives you an idea of the worst and best performance you can expect from the model, and this spread may not be acceptable for your application.
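To make the point about spread concrete, the sketch below reports the mean together with the standard deviation, minimum, and maximum of a set of evaluation scores. The helper name `summarize_scores` is hypothetical, and the scores are made-up numbers for illustration only, standing in for the per-fold results of a cross-validation run.

```python
from statistics import mean, stdev

def summarize_scores(scores):
    """Summarize repeated evaluation scores: center, spread, and extremes."""
    return {
        "mean": mean(scores),
        "stdev": stdev(scores),
        "min": min(scores),
        "max": max(scores),
    }

# Hypothetical accuracy scores, one per cross-validation fold (not real results).
fold_scores = [0.81, 0.79, 0.88, 0.70, 0.84]
summary = summarize_scores(fold_scores)
print(summary)
```

Two models with the same mean can differ widely in their minimum score, which is why the extremes are worth reporting alongside the average.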

The simplest ensemble is to fit a model multiple times on the training dataset and combine the predictions using a summary statistic, such as the mean for regression or the mode for classification. Importantly, each model needs to be slightly different, whether because of a stochastic learning algorithm, differences in the composition of the training dataset, or differences in the model itself.

This reduces the spread of the predictions made by the model. The mean performance will probably be about the same, although the worst-case and best-case performance will be brought closer to the mean. In effect, it smooths out the model's expected performance. An ensemble may or may not improve modeling performance over any single member, which is discussed in more detail below, but it should at least reduce the spread in the model's average performance.
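The smoothing effect can be illustrated with a toy simulation. The sketch below assumes, purely for illustration, that each member's prediction is the true value plus independent Gaussian noise; real ensemble members are usually correlated, so the reduction in practice tends to be smaller. The function `spread_of_predictions` and all of its parameters are hypothetical.

```python
import random
from statistics import mean, stdev

def spread_of_predictions(n_members, n_trials=2000, noise=1.0, true_value=10.0, seed=1):
    """Return (mean, standard deviation) of the averaged prediction over many trials.

    Assumption: each member predicts true_value plus independent Gaussian noise.
    """
    rng = random.Random(seed)
    combined = []
    for _ in range(n_trials):
        member_preds = [true_value + rng.gauss(0.0, noise) for _ in range(n_members)]
        combined.append(mean(member_preds))
    return mean(combined), stdev(combined)

single_mean, single_spread = spread_of_predictions(n_members=1)
ensemble_mean, ensemble_spread = spread_of_predictions(n_members=10)

print(f"single model:   mean={single_mean:.3f} spread={single_spread:.3f}")
print(f"10-member mean: mean={ensemble_mean:.3f} spread={ensemble_spread:.3f}")
```

Under these assumptions the average prediction stays close to the true value in both cases, while the spread of the averaged prediction is noticeably smaller than that of a single model.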

Bias, variance and ensembles

Machine learning models for classification and regression learn a mapping function from inputs to outputs. This mapping is learned from examples from the problem domain, the training dataset, and is evaluated on data not used during training, the test dataset.

The errors made by a machine learning model are often described in terms of two properties: bias and variance. Bias is a measure of how closely the model can capture the mapping function between inputs and outputs. It captures the rigidity of the model: the strength of the assumptions the model makes about the functional form of the mapping between inputs and outputs.

The variance of a model is the amount its performance changes when it is fit on different training data. It captures the impact that the specifics of the data have on the model. The bias and variance of a model's performance are connected. A reduction in bias can often be achieved easily by increasing variance. Conversely, a reduction in variance can easily be achieved by increasing bias. Using ensemble methods to reduce the variance of prediction errors leads to the key benefit of using ensembles in the first place: improved predictive performance.
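The relationship described above is commonly written as the bias-variance decomposition of expected squared error. The notation here is an assumption introduced for illustration: the target is $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, $\hat{f}$ is the fitted model, and expectations are taken over training sets. The second line assumes $M$ ensemble members that each have variance $\sigma_m^2$ and pairwise correlation $\rho$.

```latex
\mathbb{E}\big[(y - \hat{f}(x))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}}
  + \sigma^2

\operatorname{Var}\!\Big(\frac{1}{M}\sum_{m=1}^{M}\hat{f}_m(x)\Big)
  = \rho\,\sigma_m^2 + \frac{1-\rho}{M}\,\sigma_m^2
```

The second term of the lower equation shrinks as $M$ grows, while the first term remains. This is one way to see why the members of an ensemble need to differ from one another: the less correlated they are, the more variance averaging can remove.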

Improve performance

Reducing the variance component of the prediction error improves predictive performance. We explicitly use ensemble learning to seek better predictive performance, such as lower error for regression or higher accuracy for classification. This is the primary use of ensemble learning methods and the benefit demonstrated by the use of ensembles by most winners of machine learning competitions.

An ensemble used in this way should only be adopted if it performs better on average than any contributing member. If this is not the case, the contributing member that performs better should be used instead. It is possible, and even common, for the performance of an ensemble to be no better than that of its best-performing member. This can happen if the ensemble has one top-performing model and the other members offer no benefit, or if the ensemble is unable to make effective use of their contributions.

It is also possible for an ensemble to perform worse than its best-performing member. This is also common, and typically involves one top-performing model whose predictions are made worse by one or more poorly performing models, where the ensemble is unable to make effective use of their contributions.

Therefore, it is important to test a suite of ensemble methods and tune their behavior, just as we do for any individual machine learning model.
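Both outcomes can be reproduced in a small simulation. The sketch below assumes binary labels and members whose errors are independent, each correct with a fixed probability; these are simplifying assumptions for illustration, and the function names and accuracy values are hypothetical. It compares a majority-vote ensemble with its best single member in two scenarios.

```python
import random

def simulate_member(labels, p_correct, rng):
    """Return predictions that match each binary label with probability p_correct."""
    return [y if rng.random() < p_correct else 1 - y for y in labels]

def majority_vote(member_predictions):
    """Predict 1 when more than half of the members predict 1, else 0."""
    n_members = len(member_predictions)
    return [1 if 2 * sum(votes) > n_members else 0 for votes in zip(*member_predictions)]

def accuracy(labels, predictions):
    """Fraction of predictions that equal the true label."""
    return sum(y == p for y, p in zip(labels, predictions)) / len(labels)

def compare(member_accuracies, n_examples=5000, seed=0):
    """Return (best single-member accuracy, ensemble accuracy) on simulated data."""
    rng = random.Random(seed)
    labels = [rng.randint(0, 1) for _ in range(n_examples)]
    members = [simulate_member(labels, p, rng) for p in member_accuracies]
    best_member = max(accuracy(labels, m) for m in members)
    ensemble = accuracy(labels, majority_vote(members))
    return best_member, ensemble

# Three similar members: the vote is expected to beat each of them.
best_a, ens_a = compare([0.7, 0.7, 0.7])
# One strong member and two weak ones: the vote is expected to drag the result down.
best_b, ens_b = compare([0.95, 0.55, 0.55])

print(f"similar members: best={best_a:.3f} ensemble={ens_a:.3f}")
print(f"one strong:      best={best_b:.3f} ensemble={ens_b:.3f}")
```

In the second scenario the sensible choice is to keep the strong member on its own, or to use a combination rule that weights members by how much they can be trusted.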