13 November 2020

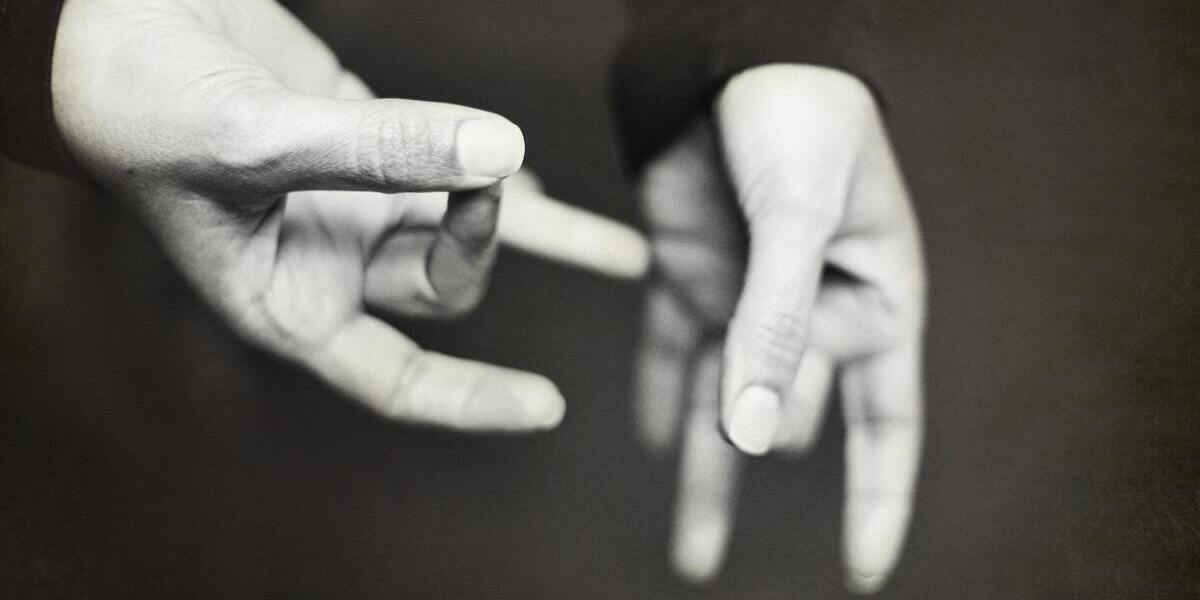

Based on https://ai.googleblog.com/2020/10/developing-real-time-automatic-sign.html

With so many companies now relying on video conferencing, it should be accessible to everyone, including people who communicate through sign language. Most video conferencing applications put whoever is speaking aloud on screen, so sign language users have no easy way to get the floor. Enabling real-time sign language detection in video conferencing is difficult because applications must classify a high-volume video stream, which makes the task computationally demanding. Partly because of these challenges, research on sign language detection is limited.

Google presents a model for real-time sign language detection and shows how it can give video conferencing systems a mechanism for identifying the person signing as the active speaker.

Google model – PoseNet

To enable a real-time solution that works across different video conferencing applications, Google designed a lightweight model that is simple to plug in and runs on an ordinary laptop. Previous attempts to integrate models into customer-facing video conferencing applications showed how important it is for the model to use few CPU cycles, so that call quality is not affected. To reduce the input dimensionality, Google extracts from the video only the information the model needs to classify each frame.

Since sign language involves the user’s body and hands, Google starts by running a pose estimation model, PoseNet. This reduces the input from an entire HD image to a small set of landmarks on the user’s body, including the eyes, nose, shoulders, and hands. These landmarks are then used to calculate the frame-to-frame optical flow, which quantifies the user’s movement for the model without retaining user-specific information. Each pose is normalized by the person’s shoulder width so that the model works for a person signing at a range of distances from the camera. The optical flow is then normalized by the video’s frame rate before being passed to the model.
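The normalization and flow steps described above can be sketched roughly as follows. This is a minimal illustration, not Google's implementation: it assumes landmarks arrive as a dictionary of hypothetical names mapped to (x, y) pixel coordinates, and that "optical flow" means the per-landmark displacement between consecutive frames multiplied by the frame rate.

```python
import math

def normalize_pose(landmarks, left="left_shoulder", right="right_shoulder"):
    """Divide all coordinates by the shoulder width (landmark names are assumed)."""
    (lx, ly), (rx, ry) = landmarks[left], landmarks[right]
    width = math.hypot(lx - rx, ly - ry)
    if width == 0:
        raise ValueError("shoulder width is zero; cannot normalize")
    return {name: (x / width, y / width) for name, (x, y) in landmarks.items()}

def optical_flow(prev_pose, curr_pose, fps):
    """Displacement of each landmark between two frames, scaled by frame rate.

    Multiplying by fps makes the value comparable across videos recorded
    at different frame rates (movement per second, not per frame).
    """
    return {
        name: math.hypot(curr_pose[name][0] - prev_pose[name][0],
                         curr_pose[name][1] - prev_pose[name][1]) * fps
        for name in curr_pose
        if name in prev_pose
    }

# Two consecutive frames: only the wrist moves, by 30 pixels.
frame_a = {"left_shoulder": (100, 100), "right_shoulder": (200, 100), "right_wrist": (150, 200)}
frame_b = {"left_shoulder": (100, 100), "right_shoulder": (200, 100), "right_wrist": (150, 230)}

flow = optical_flow(normalize_pose(frame_a), normalize_pose(frame_b), fps=30)
```

Because every coordinate is divided by the shoulder width, the same gesture filmed from twice as close produces the same flow values, which is the point of the normalization.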

To test this approach, Google used the German Sign Language corpus (DGS), which contains long videos of people signing and includes span annotations that indicate in which frames signing takes place. A linear regression model was trained as a baseline to predict from optical flow data when a person is signing. This baseline reached approximately 80% accuracy using only about 3 μs (0.000003 seconds) of processing time per frame. Given the optical flow of the 50 previous frames as context, the linear model reaches 83.4%.
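To make the idea of "context" concrete, here is a small sketch of how the flow vectors of previous frames could be concatenated into one input for a linear model. The function names, the zero padding at the start of a video, the threshold, and the weights are all assumptions for illustration; in practice the weights would be learned from the annotated corpus.

```python
def context_features(flow_frames, t, context=50):
    """Concatenate the flow vector of frame t with those of the `context` frames before it.

    Frames before the start of the video are zero-padded (an assumption).
    """
    dim = len(flow_frames[0])
    features = []
    for i in range(t - context, t + 1):
        features.extend(flow_frames[i] if i >= 0 else [0.0] * dim)
    return features

def linear_predict(features, weights, bias, threshold=0.5):
    """Linear score thresholded into a signing / not-signing decision."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return score >= threshold

# Toy data: 3 frames with 2 flow values each, and a context of 2 previous frames.
flow_frames = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
features = context_features(flow_frames, t=1, context=2)
is_signing = linear_predict(features, weights=[0.1] * len(features), bias=0.0)
```

With 50 frames of context the input grows to 51 times the number of flow values per frame, which is still cheap for a linear model.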

To generalize the use of context, Google used a long short-term memory (LSTM) architecture, which keeps a memory of previous time steps without an explicit lookback window. Using a single-layer LSTM followed by a linear layer, the model achieves up to 91.5% accuracy at 3.5 ms (0.0035 seconds) of processing time per frame.
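The shape of such a model, a single LSTM layer followed by a linear layer, can be illustrated with a small pure-Python sketch. The class name, sizes, and random untrained weights are hypothetical; this shows only how the state is carried from frame to frame, not the trained model from the post.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

class TinyLSTMDetector:
    """One LSTM layer plus a linear output layer, processing one frame at a time."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        rand = lambda n: [rng.uniform(-0.1, 0.1) for _ in range(n)]
        # One weight row per hidden unit for each gate:
        # i = input, f = forget, g = cell candidate, o = output.
        self.gates = {g: [rand(input_size + hidden_size) for _ in range(hidden_size)]
                      for g in "ifgo"}
        self.bias = {g: [0.0] * hidden_size for g in "ifgo"}
        self.out_w = rand(hidden_size)  # linear layer on top of the hidden state
        self.out_b = 0.0
        self.h = [0.0] * hidden_size    # hidden state
        self.c = [0.0] * hidden_size    # cell state (the "memory")

    def _gate(self, name, xh, activation):
        return [activation(sum(w * v for w, v in zip(row, xh)) + b)
                for row, b in zip(self.gates[name], self.bias[name])]

    def step(self, x):
        """Consume the flow vector of one frame and return P(signing)."""
        xh = list(x) + self.h
        i = self._gate("i", xh, sigmoid)
        f = self._gate("f", xh, sigmoid)
        g = self._gate("g", xh, math.tanh)
        o = self._gate("o", xh, sigmoid)
        self.c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, self.c, i, g)]
        self.h = [oj * math.tanh(cj) for oj, cj in zip(o, self.c)]
        logit = sum(w * hj for w, hj in zip(self.out_w, self.h)) + self.out_b
        return sigmoid(logit)

detector = TinyLSTMDetector(input_size=3, hidden_size=4)
probability = detector.step([0.1, 0.2, 0.3])
```

Unlike the linear baseline with a fixed context window, this model needs no buffer of past frames: everything it remembers lives in `h` and `c`, which are updated on every call to `step`.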

Proof of concept

Once Google had a functioning sign language detection model, they needed a way to use it to trigger the active speaker function in video conferencing applications. They developed a lightweight, real-time web demo that detects sign language, connects to various video conferencing applications, and can set the user as the “speaker” when they sign. The demo combines PoseNet for fast human pose estimation with the sign language detection model, enabling reliable real-time operation.

When the sign language detection model determines that the user is signing, it passes an ultrasonic audio tone through a virtual audio cable, which any video conferencing application can detect as if the signing user were speaking. The tone is transmitted at 20 kHz, which is normally outside the range of human hearing. Because video conferencing applications typically treat audio “volume” as talking rather than detecting speech itself, the tone makes the application think the user is speaking.
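The trick can be illustrated with a short sketch that generates a 20 kHz tone and runs a volume-only activity check on it. The 48 kHz sample rate, the amplitude, the threshold, and the `looks_like_talking` function are assumptions for illustration; real conferencing applications use their own, undocumented, activity detection.

```python
import math

SAMPLE_RATE = 48_000  # Hz (assumed); must be above 40 kHz to represent a 20 kHz tone
TONE_HZ = 20_000      # frequency stated in the post

def ultrasonic_tone(duration_s, amplitude=0.5):
    """Samples of a 20 kHz sine wave in the range [-amplitude, amplitude]."""
    n = int(SAMPLE_RATE * duration_s)
    return [amplitude * math.sin(2 * math.pi * TONE_HZ * k / SAMPLE_RATE)
            for k in range(n)]

def rms(samples):
    """Root-mean-square level, a simple measure of audio volume."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def looks_like_talking(samples, threshold=0.1):
    """Hypothetical volume-only check: it never asks whether the sound is speech."""
    return rms(samples) >= threshold

tone = ultrasonic_tone(0.1)       # 100 ms of tone, emitted while the user signs
silence = [0.0] * len(tone)       # what the microphone would otherwise deliver
```

A check like this flags `tone` as activity and `silence` as none, even though a person in the call would hear nothing in either case.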

Google believes that video conferencing applications should be accessible to everyone, and this work shows how the model can let people who use sign language take part in video calls more conveniently.

Sign language recognition is a hard problem when we consider all the possible combinations of gestures that such a system must understand and translate. With this in mind, probably the best approach is to divide it into simpler problems; the system presented here would be a possible solution to one of them.

The system did not perform very well, but it demonstrated that a first-person sign language translation system can be built using only cameras and neural networks. The next step is to analyze the solution and explore ways to improve it. Improvements could come from collecting more high-quality data, trying out more convolutional neural network architectures, or redesigning the video system.