WordPress Tide – better coding in WordPress

13 June 2018

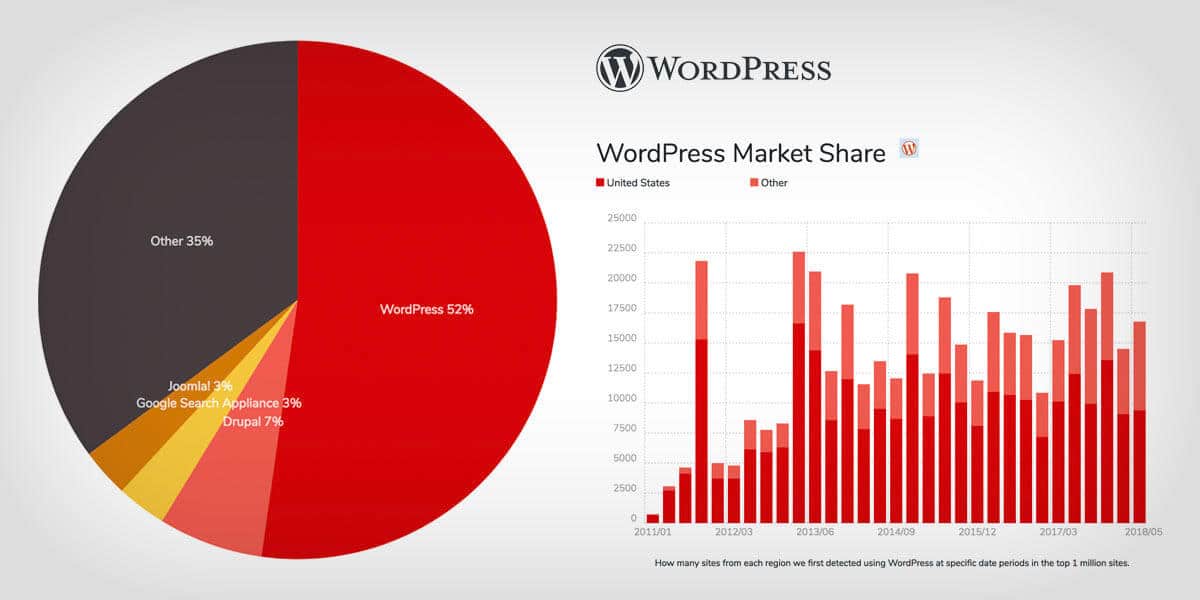

WordPress implemented in 30% of websites. What makes it so popular?

22 June 2018

The relationship between JavaScript and SEO goes back a long way and has been a frequent topic of discussion online ever since, mainly in SEO circles. Building websites with JavaScript quickly became a big hit. Many programmers adopted the technique with little knowledge of whether search engines could analyze and understand the content.

In the meantime, Google has changed its methodology and its position on JavaScript. Everyone began to doubt whether search engines could index JavaScript. And that was the wrong question. The better question is whether search engines can analyze and understand the content rendered by JavaScript. In other words, can Google rank your site if it’s built with JavaScript?

JavaScript in brief

JavaScript is one of the most popular programming languages for creating websites. Together with its frameworks, it is used to build interactive websites and to control the behaviour of various elements on the site.

Initially, JS ran only on the client side (front end), in browsers. Now the code is also embedded in host software such as web servers (back end) and databases, which saves a lot of trouble. Problems begin when a JavaScript implementation relies solely on client-side rendering.

If the JavaScript framework has server-side rendering, the problem is solved before it even appears. To better understand why problems arise and how to avoid them, it is important to have a basic knowledge of how search engines work.
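The difference between the two rendering approaches can be illustrated with a minimal sketch. The product data and the function names (`renderOnServer`, `renderShellForClient`) and the `/app.js` path are hypothetical and not tied to any particular framework; the point is only what the first HTML response contains in each case.

```javascript
// Hypothetical data; in a real site this would come from a database or API.
const products = [
  { name: "Blue mug", price: 12 },
  { name: "Red <limited> mug", price: 15 },
];

// Escape characters that have a special meaning in HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Server-side rendering: the HTML response already contains the content,
// so a crawler can read it without running any JavaScript.
function renderOnServer(items) {
  const rows = items
    .map((p) => `<li>${escapeHtml(p.name)}: ${p.price}</li>`)
    .join("");
  return `<!doctype html><html><body><ul id="products">${rows}</ul></body></html>`;
}

// Client-side rendering only: the response is an empty shell, and the list
// is filled in later by a script that the crawler has to execute.
function renderShellForClient() {
  return `<!doctype html><html><body><ul id="products"></ul><script src="/app.js"></script></body></html>`;
}
```

With the first function the product names are in the markup a crawler downloads; with the second they appear only after rendering, which is where indexing problems tend to start.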

Search engine operation – crawling, indexing and ranking

When we talk about JavaScript and search engine optimization, we need to look at the first two processes: crawling and indexing. We will deal with ranking later.

The crawl phase is about discovery. The process is very complicated and uses programs called spiders (or crawlers). Googlebot is perhaps the best-known crawler.

Googlebot plays the major role in crawling. Indexing is different: there, the most important program is Caffeine. This phase involves analyzing the URL and understanding the content and its significance. The indexer also tries to render pages and run JavaScript.

Once this stage completes without any errors in Search Console, the ranking process should begin. At this point, webmasters and SEO experts must put effort into offering high-quality content, optimizing the website, and acquiring and building valuable links in accordance with Google’s quality guidelines.

JavaScript's influence on SEO

JavaScript means less load on the server and faster interactions (code runs immediately in the browser instead of waiting for a server response), easier implementation, richer interfaces and greater versatility (it can be used in a huge number of applications). But JavaScript also brings some SEO problems along the way. Many webmasters do not optimize the content that their JavaScript code generates.

A JavaScript website can be indexed and ranked. We now know that the best approach is to help Google understand the generated content. To help Google rank content that uses JavaScript, you need tools and plug-ins that make it SEO-friendly. When content is easy to discover and easy to evaluate, it has a better chance of ranking higher in the SERPs.

Although JavaScript has some limitations and Google has some problems with it, most of those problems are due to improper implementation, not to Google’s inability to deal with JavaScript.

How can JS be SEO-friendly?

In 2015, Google deprecated its AJAX crawling scheme, and much has changed since. The technical guidelines for webmasters state that, as long as Googlebot is not blocked from crawling JS or CSS files, it can render and interpret websites.
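One way to check that script and style files are reachable is to test their paths against the site's robots.txt. The sketch below is a deliberately simplified assumption of how such a check might look: it handles only `User-agent` and `Disallow` lines with plain prefix matching, ignores `Allow` rules and wildcards, and uses only the first matching group. The function names and sample paths are hypothetical, and a real crawler applies more rules than this.

```javascript
// Split a robots.txt file into groups of { agents, disallow }.
function parseGroups(robotsTxt) {
  const groups = [];
  let current = null;
  let lastWasAgent = false;
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.split("#")[0].trim(); // drop comments
    const colon = line.indexOf(":");
    if (colon === -1) continue;
    const field = line.slice(0, colon).trim().toLowerCase();
    const value = line.slice(colon + 1).trim();
    if (field === "user-agent") {
      // Consecutive User-agent lines share one group.
      if (!lastWasAgent) {
        current = { agents: [], disallow: [] };
        groups.push(current);
      }
      current.agents.push(value.toLowerCase());
      lastWasAgent = true;
    } else {
      lastWasAgent = false;
      if (field === "disallow" && current && value !== "") {
        current.disallow.push(value);
      }
    }
  }
  return groups;
}

// True if `path` starts with a Disallow prefix in the group for `agent`,
// falling back to the "*" group when there is no specific one.
function isBlocked(robotsTxt, agent, path) {
  const groups = parseGroups(robotsTxt);
  const name = agent.toLowerCase();
  const group =
    groups.find((g) => g.agents.includes(name)) ||
    groups.find((g) => g.agents.includes("*"));
  if (!group) return false;
  return group.disallow.some((prefix) => path.startsWith(prefix));
}

// Hypothetical robots.txt that accidentally hides the scripts.
const sampleRobots = `
User-agent: *
Disallow: /admin/
Disallow: /assets/js/
`;
const scriptBlocked = isBlocked(sampleRobots, "Googlebot", "/assets/js/app.js");
const styleBlocked = isBlocked(sampleRobots, "Googlebot", "/assets/css/site.css");
```

In this sample the script path is blocked while the stylesheet is not, so a crawler would be able to style the page but not run the code that produces its content.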

Google had other problems that needed to be resolved. Some webmasters using JS platforms had web servers that served a pre-rendered page, which normally should not happen. Pre-rendered pages should follow the guidelines and benefit the user. In any case, it is very important that the content sent to Googlebot matches the content displayed to the user, both in how it looks and in how it can be interacted with. Basically, when Googlebot crawls a page, it should see the same content that the user sees. Serving different content is cloaking and is against Google's quality guidelines.
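A rough way to test this yourself is to compare the visible text of the page served to a crawler with the page served to a regular visitor. The following is only a sketch under simplifying assumptions: it strips tags with regular expressions instead of using a real HTML parser, and the helper names (`visibleText`, `servesSameContent`) are made up for this example.

```javascript
// Reduce an HTML string to its visible text: drop script and style blocks,
// drop the remaining tags, and collapse whitespace.
function visibleText(html) {
  return html
    .replace(/<(script|style)\b[^>]*>[\s\S]*?<\/\1>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// True when both responses show the same text, regardless of markup details.
function servesSameContent(htmlForBot, htmlForUser) {
  return visibleText(htmlForBot) === visibleText(htmlForUser);
}

// Hypothetical responses: same text, different markup.
const forBot = "<h1>Mugs</h1><p>Blue mug</p>";
const forUser =
  '<div><h1>Mugs</h1>\n<p class="item">Blue mug</p><script>track()</script></div>';
const sameContent = servesSameContent(forBot, forUser);
```

A pre-rendered page that differs only in markup passes this check; a page that shows the crawler different text does not, and that second case is the one the guidelines warn about.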

The progressive enhancement guidelines say that the best way to build a site’s structure is to use only HTML and then add AJAX on top for the look and feel of the page. In this case, you’re covered, because Googlebot will see the HTML and the user will benefit from the AJAX look.

So when Google indexes a website, it may read the templates, not the data. Therefore, it is necessary to write server-side code that sends Google a version of the page that does not depend on JavaScript. On pages rendered by JavaScript on the client, links have always been a problem, because we never knew whether Google could follow them to reach the content.
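To make the link problem concrete, the sketch below lists the links in a piece of markup that a crawler could follow without running any script, that is, anchors with a real URL in `href`. It is a simplified illustration: it uses a regular expression rather than an HTML parser, and treating fragment-only and `javascript:` links as not followable is an assumption made for this example. The function name and sample markup are hypothetical.

```javascript
// Collect href values from <a> tags, skipping links that only work via JavaScript.
function crawlableLinks(html) {
  const links = [];
  const anchor = /<a\b[^>]*\bhref\s*=\s*["']([^"']*)["'][^>]*>/gi;
  let match;
  while ((match = anchor.exec(html)) !== null) {
    const href = match[1].trim();
    const scriptOnly =
      href === "" ||
      href.startsWith("#") ||
      href.toLowerCase().startsWith("javascript:");
    if (!scriptOnly) links.push(href);
  }
  return links;
}

// Hypothetical navigation mixing a plain link with script-driven ones.
const navigation = `
  <a href="/mugs">Mugs</a>
  <a href="#" onclick="go('/cups')">Cups</a>
  <span onclick="go('/plates')">Plates</span>
  <a href="javascript:void(0)">More</a>
`;
const followable = crawlableLinks(navigation);
```

Only `/mugs` ends up in `followable`; the other three entries depend on click handlers, so the content behind them may never be discovered unless each one also has a regular link.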

JavaScript and SEO is a complicated topic with many gaps and misunderstandings, and it calls for further dialogue and clarification. Making JavaScript work well with SEO requires developers to understand the difference between Googlebot and Caffeine. JavaScript has many advantages, but SEO-friendliness is not one of them, and it can sometimes be difficult to achieve.