Angular, React or Vue? What to choose in 2019?

23 March 2019

Agile positioning techniques using data analysis and SEO PR.

9 April 2019

Computers are getting better at generating photos, including photos of people. The development of artificial intelligence is increasing at a surprising pace. Recently, machine learning has become capable of generating and modifying images and videos. The face generated by artificial intelligence uses neural networks to create fake images.

Fake faces

In 2014, machine learning researcher Ian Goodfellow introduced the idea of generative adversarial networks (GAN). The developers used GAN to create everything from graphics to dental crowns.

GANs consist of 2 neural networks, generator and discriminator that compete with each other to minimize or maximize a specific function. The discriminator retrieves real data training, as well as generated (fake) data from the generator and must derive the probability that each image is true. His aim is to maximizing the number of correctly classifying data that it provides, while the generator tries to minimize this number. He’s trying to the discriminator classified the generated result as real by making less correct discriminator.

A particular type of GAN that has been built is the deeply converged generative adversarial network (DCGAN), which generates fake fakes. The basic idea is that both networks are built using a convolutional layering structure where object maps or kernels scan a specific part of the image for key features. This is done by matrix multiplication as each pixel in an image can be represented as a number. The pixel group values are multiplied by a matrix, allowing the network to learn important features such as edges, shapes, and lines that are present in that group.

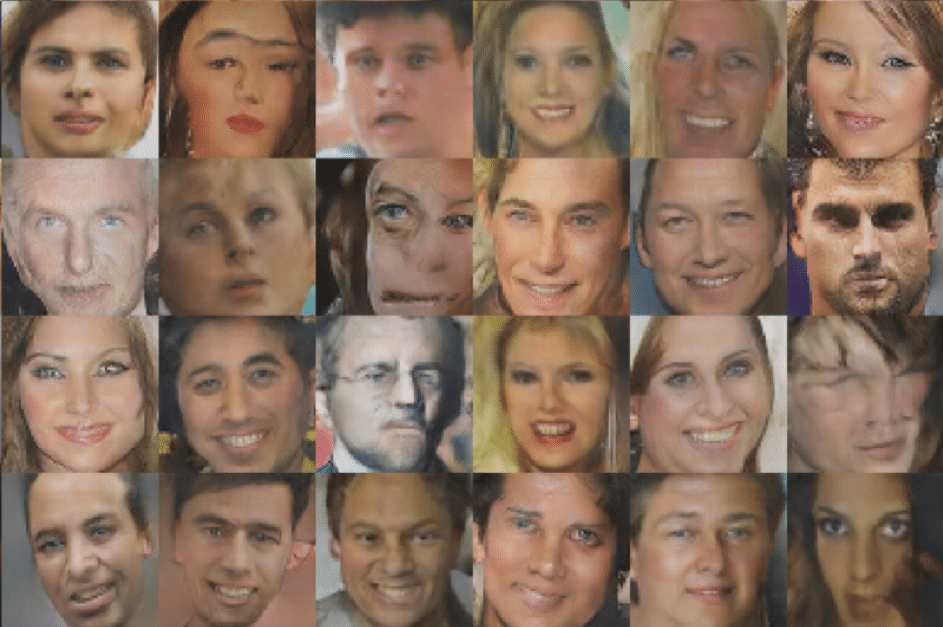

GAN efficiency is often associated with realistic results. What started four years ago as tiny, blurry images in shades of gray of human faces has evolved into full-color portraits. The first GAN images were easily identifiable by humans.

Examples of faces generated by GAN, published in October 2017, are more difficult to identify.

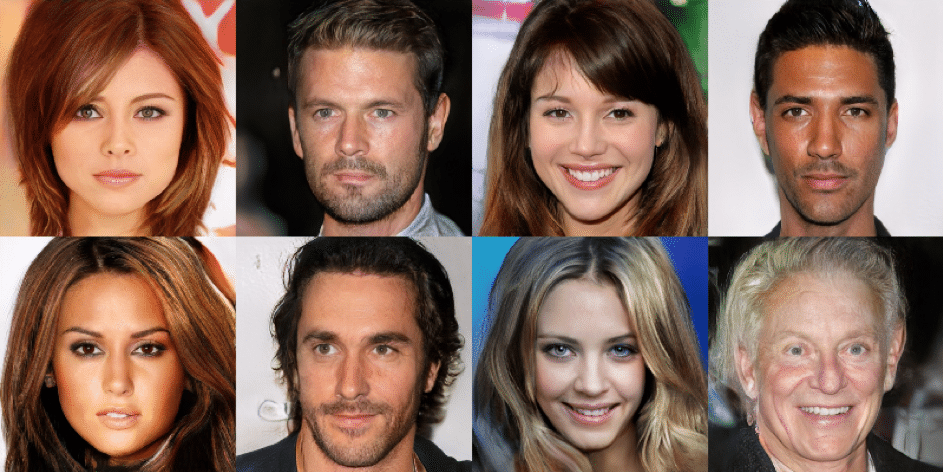

GAN technology has been modified by a well-known company graphics and AI – Nvidia, to create a high-quality database of thousands pictures of people, all computer-generated. First, the network generators learn constant input from photographs of a real person. this face is used as a reference and encoded as a latent-mapped vector space describing all the features in the image. Researchers have trained their own computer for 70,000 photos of real people from Flickr, which included various age, ethnic and image groups.

Wykorzystując te obrazy jako podstawę, komputer był w stanie uczyć się i segmentować aspekty różnych osób – takich jak kolor włosów, kształt twarzy lub kolor skóry – i generować zupełnie nowe obrazy. Technologia ta jest również w stanie wykrywać i reprodukować akcesoria takie jak okulary, okulary przeciwsłoneczne lub czapki i tworzyć nieskończoną liczbę obrazów zupełnie nowych osób.

Generative adversarial networks usually need to be trained to produce one category of images at a time, such as faces or cars. BigGAN was trained on a giant database of 14 million diverse images from the Internet, spanning thousands of categories, in an effort that required hundreds of specialized machine learning processors. This wide experience of the visual world means that the software can synthesize many different types of highly realistic images.

False moves

In 2018, scientists and artists moved AI-created and enhanced visuals to another level. Scroll through these examples to see like software that can make images, video and art power new forms of entertainment – as well as disinformation.

Oprogramowanie opracowane w UC Berkeley może przenosić ruchy jednej osoby, przechwyconej na wideo, na inną. Proces rozpoczyna się od dwóch klipów źródłowych – jednego przedstawiającego ruch do przeniesienia, a drugiego przedstawiającego próbkę osoby, która ma zostać przekształcona. Jedna część oprogramowania wyodrębnia pozycje ciała z obu klipów; inny uczy się, jak stworzyć realistyczny obraz przedmiotu dla dowolnej pozycji ciała. Może następnie wygenerować wideo obiektu, wykonującego mniej lub więcej dowolnych ruchów. W początkowej wersji system potrzebuje 20 minut wideo wejściowego, zanim będzie mógł mapować nowe ruchy na swoje ciało.

The end result is similar to a trick often used in Hollywood. Superheroes, aliens and monkeys in the movies are animated by placing markers on the faces and bodies of the actors, so they can be tracked in 3D using special cameras. The Berkeley project suggests that machine learning algorithms can significantly increase the availability of these production values.

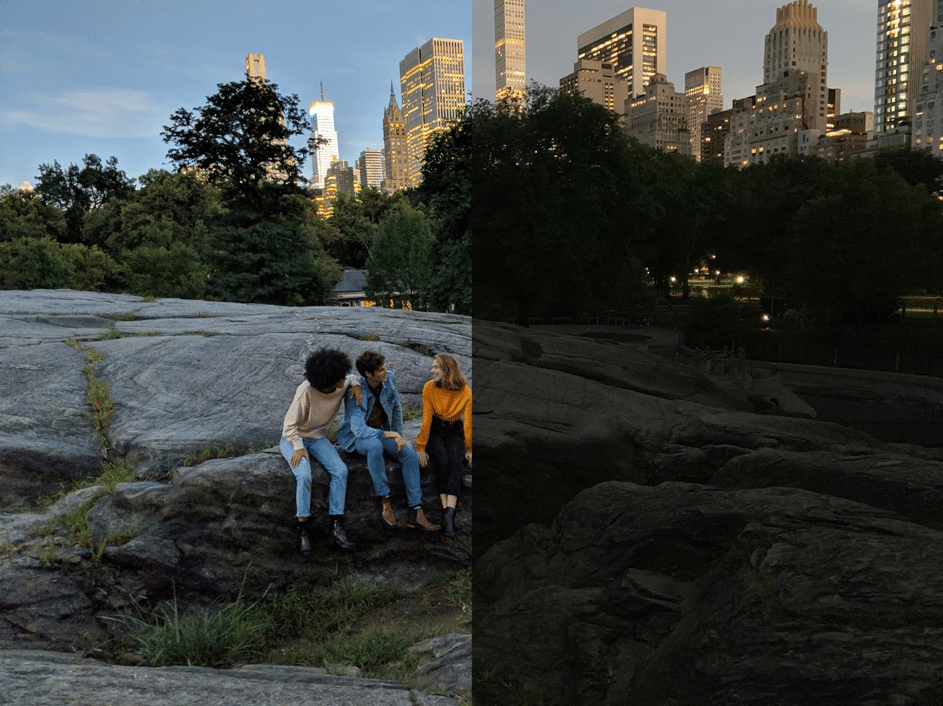

Night vision

AI-enabled images have become so practical that you can carry them in your pocket. The Night Sight feature on Google’s Pixel phones, launched in October, uses a set of algorithmic tricks to turn day into night. One is to combine multiple photos to create each final image; comparing them allows the software to identify and remove random noise, which is more of a problem in low light. The clean composite image that results from this process is further enhanced with the help of machine learning. Google engineers trained the software to fix the lighting and color of photos taken at night, using a collection of dark images combined with versions corrected by photo experts.

Experts sound the alarm

As GANs become more sophisticated, senior US and UK politicians are expressing concern about the threat posed by fakes to spread misinformation or cause conflict. Researchers are struggling to create new algorithms to detect video and photo spoofing as technology becomes too sophisticated for the human eye.

Experts have been alarming for several years about how a trick with artificial intelligence can affect society. These tools can be used for disinformation and propaganda and can undermine public trust in pictorial evidence, which can harm both the judiciary and politics. These warnings should not be ignored. First, the ability to generate faces has received a lot of attention in the AI community.

There are also serious limitations when it comes to knowledge and time. Researchers at Nvidia had to train their model on eight Tesla GPUs for a week to create these faces.

Fortunately, experts are also looking at new ways to authenticate digital images. Some solutions have already been launched, such as camera apps that stamp photos with geocodes to verify when and where they were taken.